Michael Liendo, Pieter van Veen. (2021)

For the minor in Smart Manufacturing and Robotics, we developed an Android application that recognises the balls used in different ball games. Time was limited because the project started after the stricter COVID lockdown had taken effect, which forced us to abandon our previous project and start a new one at short notice. We quickly decided to build an app that detects objects, so that we could gain experience both in developing Android applications and in machine learning. In the end, we chose to scan different types of sports balls because they were easy to get hold of.

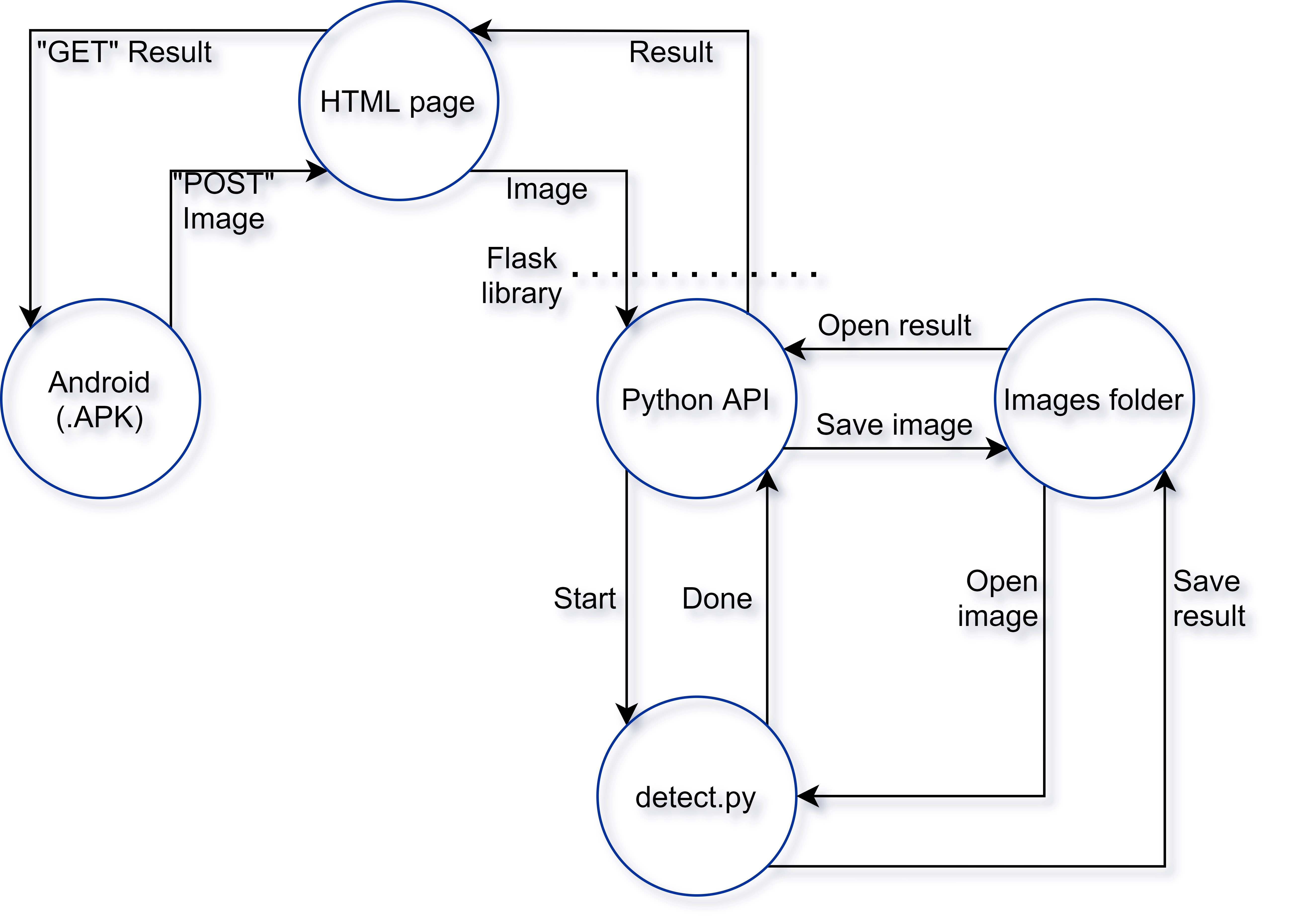

Data architecture

The biggest challenge in this project was the data infrastructure, because we wanted to produce a native Android application. The final setup is as follows: the application takes a picture and sends it to an API server. The server saves the photo and runs an object detection program that recognises the different balls. The detection result is sent back to the application, where it is displayed. Each component is described briefly in the sections below.

Figure 1: Data architecture

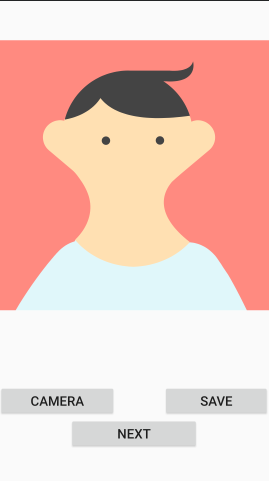

The app

The app's user interface was built with three screens.

The first one gave an introduction to the app.

The second screen lets the user take pictures by tapping the camera button and save them to the phone's gallery by tapping the save button.

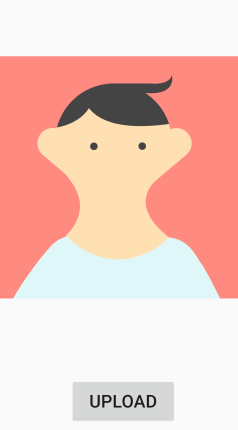

The third screen was built to send and receive a picture. When the upload button is pressed, the chosen picture is sent to a Flask server, where it is processed and sent back to the app.

Sending an image to the server is handled by a library called Retrofit, which helps the app make POST and GET requests that the server can understand.

Figure 2: App Home screen, camera screen, and upload screen

The API

The API (Application Programming Interface) waits for a request from the app. When the app makes a request and sends an image, the API runs the detection program and sends the result back to the app. Communication between the app and the API uses HTTP, which is handled by the Flask library: it takes care of processing the HTTP “GET” and “POST” requests.

Figure 3: API running
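The request flow described in this section can be sketched roughly as follows. This is a minimal illustration, not the project's actual server code: the route names `/detect` and `/status`, the form field name `image`, and the `run_detection` placeholder (which returns a made-up result instead of calling YOLOv5) are all assumptions made for the example.

```python
import os
import tempfile

from flask import Flask, jsonify, request
from werkzeug.utils import secure_filename

app = Flask(__name__)

# Hypothetical upload location; the real server may store photos elsewhere.
UPLOAD_DIR = tempfile.mkdtemp()


def run_detection(image_path):
    """Placeholder for the detection program.

    Returns a fixed, made-up result so the sketch runs without a model.
    """
    return [{"label": "tennis ball", "confidence": 0.0}]


@app.route("/detect", methods=["POST"])
def detect():
    # The app is assumed to upload the photo as a multipart form field "image".
    uploaded = request.files.get("image")
    if uploaded is None:
        return jsonify(error="no file field named 'image'"), 400

    # Save the photo on the server, then run the detection on the saved file.
    filename = secure_filename(uploaded.filename or "") or "upload.jpg"
    image_path = os.path.join(UPLOAD_DIR, filename)
    uploaded.save(image_path)

    return jsonify(detections=run_detection(image_path))


@app.route("/status", methods=["GET"])
def status():
    # Simple GET endpoint the app could use to check that the API is running.
    return jsonify(status="ok")
```

Saved as, for example, `server.py`, a sketch like this could be started with `flask --app server run`. The app would then POST the picture to `/detect` and read the detections from the JSON response.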

The detection program

The detection program was created with the help of YOLOv5, a single-stage object detector. The model already exists and only needs to be trained on our own dataset. Training was done in a Google Colab notebook, which lets code run on a more powerful remote GPU; we chose this because training is GPU-intensive. The final weights were obtained by training for 3000 epochs. An epoch is one complete pass over the training images, so the training code worked through the full training set 3000 times, adjusting the weights along the way so that the model recognises the balls in our dataset more reliably.
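To make the epoch count concrete, the small calculation below shows how epochs relate to the number of weight updates. The training-set size and batch size used here are hypothetical, since the write-up states neither the split nor the batch size.

```python
import math


def training_iterations(num_train_images, batch_size, epochs):
    """Number of weight updates when every epoch visits each image once."""
    batches_per_epoch = math.ceil(num_train_images / batch_size)
    return batches_per_epoch * epochs


# Hypothetical numbers: 100 training images, batches of 16, 3000 epochs.
# ceil(100 / 16) = 7 batches per epoch, so 7 * 3000 = 21000 updates.
print(training_iterations(num_train_images=100, batch_size=16, epochs=3000))
```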

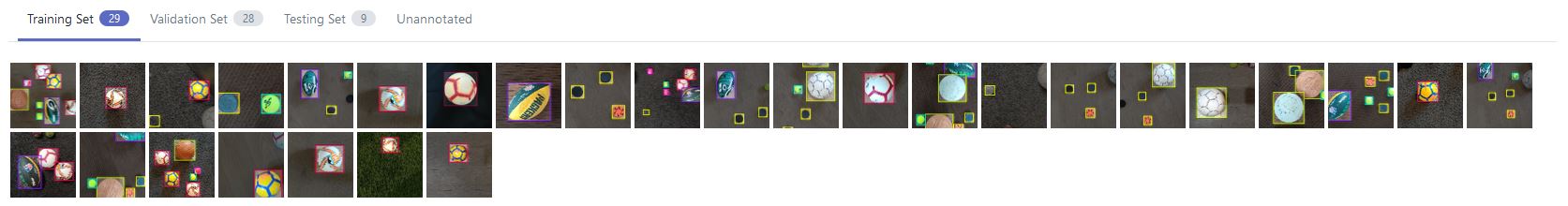

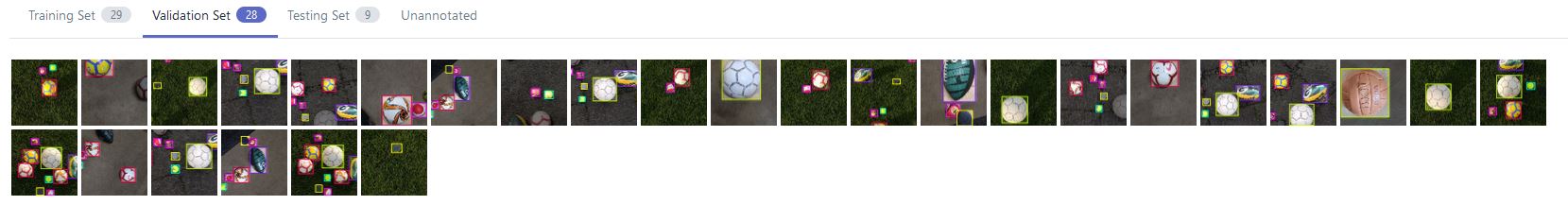

The dataset

The object detector is trained on a custom dataset, which in this case was prepared in Roboflow. The dataset is split into training, validation and test images. It contains 66 original images, which image augmentation increases to 124. In each image the balls are marked with bounding boxes, so that the model can learn the pixel patterns that characterise each type of ball. In the end, the dataset covers 6 types of sports balls: tennis balls, hockey pucks, mini footballs, footballs (including a retro ball), baseballs and a rugby ball.

Figure 4: The dataset
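YOLOv5 reads each bounding box as one line of text: a class index followed by the box centre, width and height, all scaled to the range 0 to 1 relative to the image size. The sketch below shows how a box drawn in pixels could be converted to that format. The order of the class list and the example coordinates are assumptions for illustration; in practice a tool such as Roboflow exports these label files.

```python
# Class order is an assumption; the real index of each ball type depends on
# how the dataset was exported.
CLASS_NAMES = [
    "tennis ball",
    "hockey puck",
    "mini football",
    "football",
    "baseball",
    "rugby ball",
]


def to_yolo_label(class_name, box, image_size):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    img_w, img_h = image_size
    class_id = CLASS_NAMES.index(class_name)

    # Centre and size of the box, normalised by the image dimensions.
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h

    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A made-up baseball box in a 640x480 photo.
print(to_yolo_label("baseball", (100, 200, 300, 400), (640, 480)))
# prints "4 0.312500 0.625000 0.312500 0.416667"
```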