Elmer Russchen, Jaime Cobos. (2021)

Google Colab notebook

GitHub repository

The problem

The original project for DERO was to pick various types of bread out of a crate and place them on a conveyor belt in the correct orientation, at a rate of at least 40 bread rolls per minute. Due to COVID-19, work could no longer be done with a physical robot. Therefore, the scope of the project was limited to detecting a single type of bread roll in images obtained from a camera. To achieve this, a deep neural network was trained to detect bread rolls in top-down images, classify them, and estimate their location and orientation.

The solution

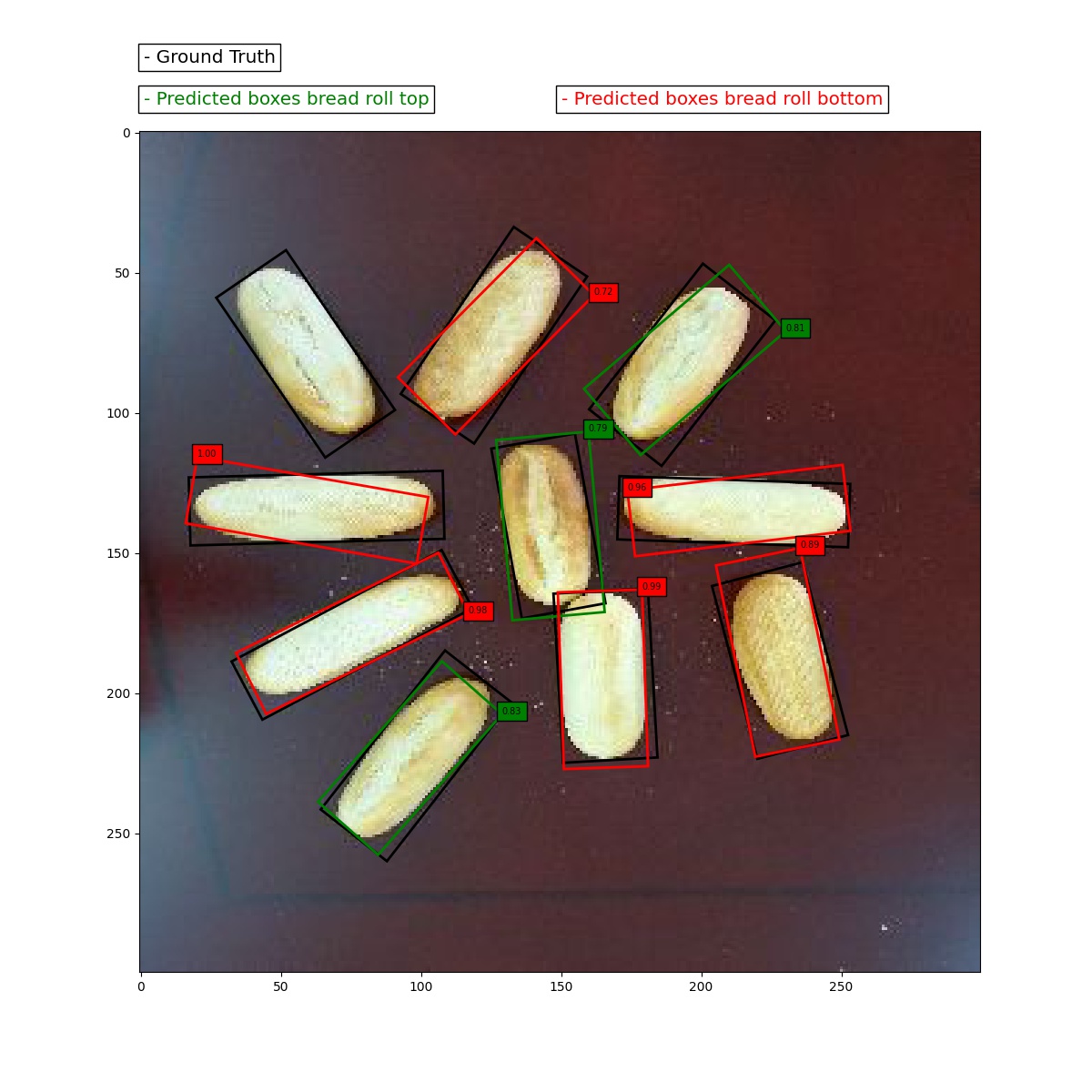

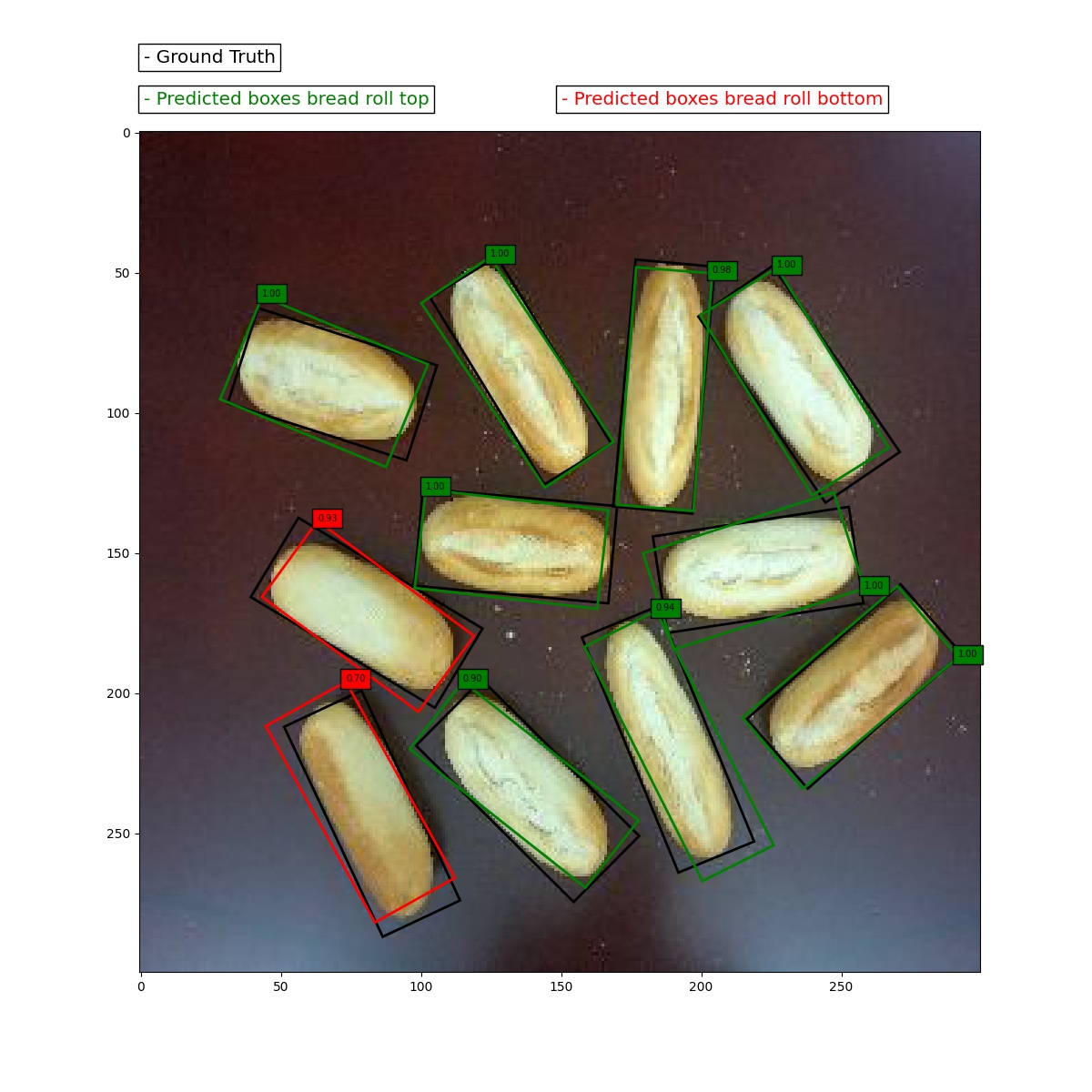

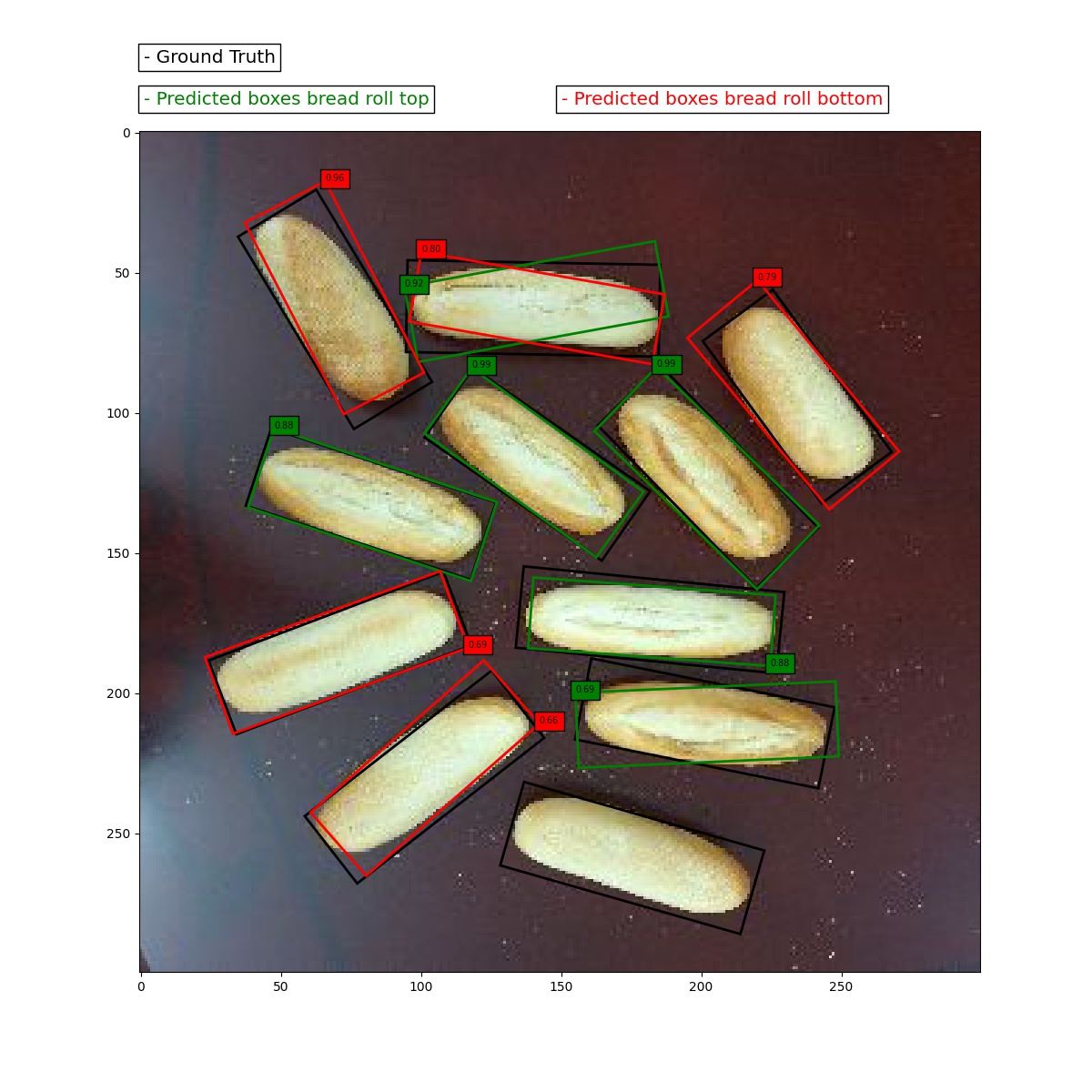

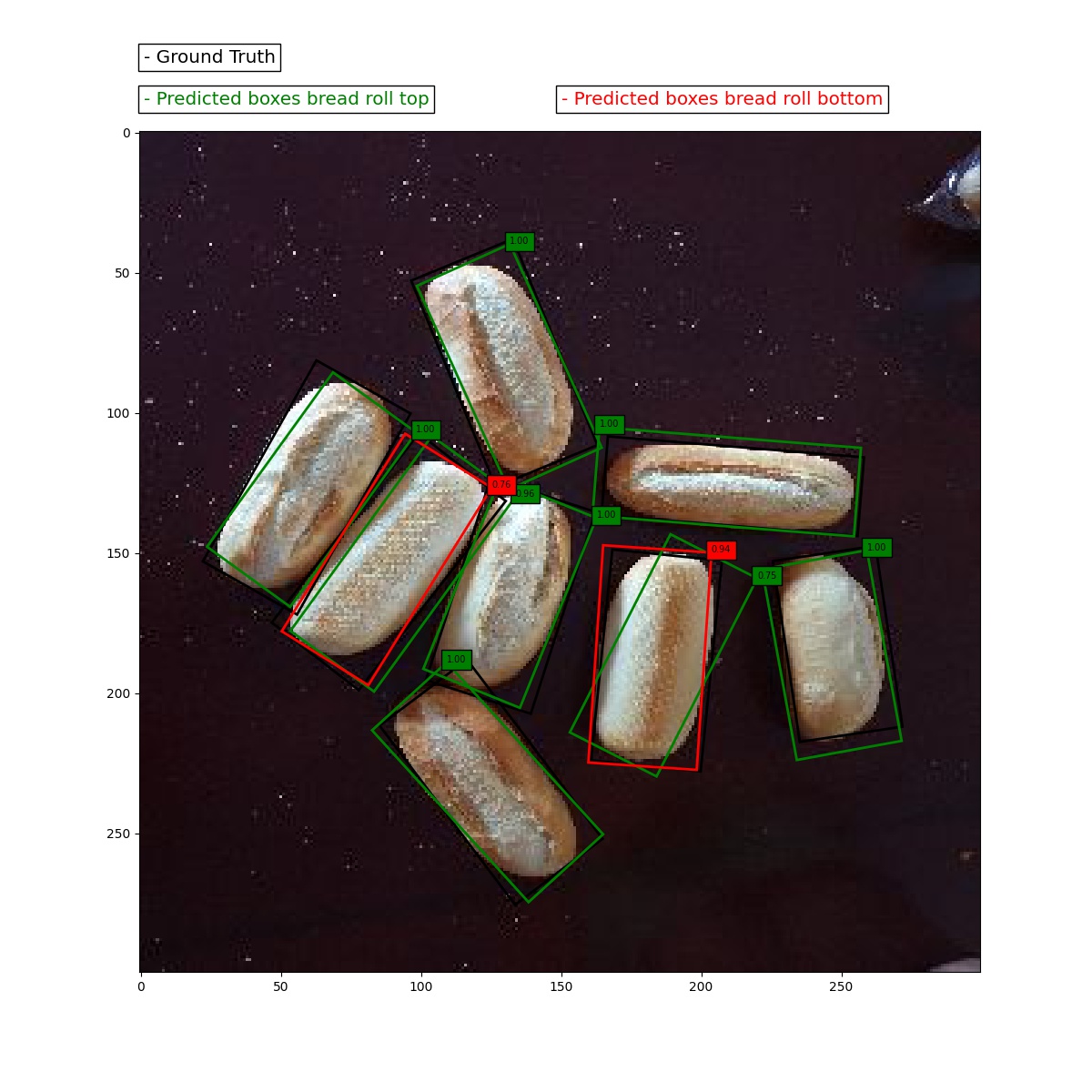

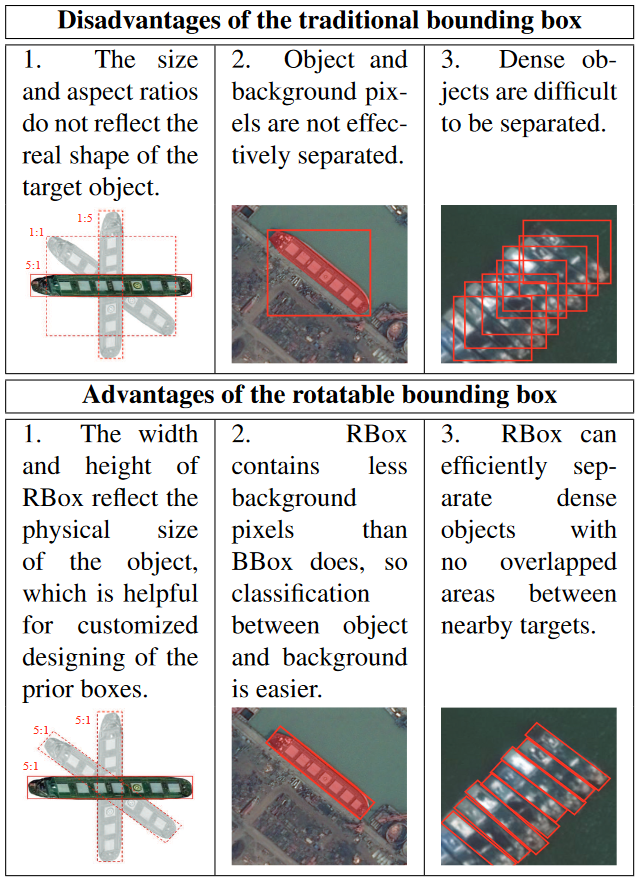

Object detection using machine learning is nothing new. However, detecting arbitrarily rotated objects is still challenging, because many existing methods only predict a traditional bounding box. Such methods draw an axis-aligned rectangle around each detected object, which does not capture the angle at which the object is oriented. To obtain the angle of an object, a rotated bounding box is needed instead. A comparison of the two is shown in the figure.
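To make the difference concrete, here is a minimal sketch that computes the corners of a rotated box and the smallest axis-aligned box around it. It assumes a box is described by its centre `(cx, cy)`, width `w`, height `h` and an angle in radians measured counter-clockwise in a y-up frame; this convention is an assumption for illustration, and DRBox or the labeling tool may use a different one. The function names are hypothetical.

```python
import math

def rotated_box_corners(cx, cy, w, h, angle):
    """Corners of a w x h rectangle centred at (cx, cy), rotated by `angle`
    radians (counter-clockwise in a y-up frame; assumed convention)."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    offsets = ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2))
    # Rotate each corner offset around the centre, then translate.
    return [(cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a)
            for dx, dy in offsets]

def axis_aligned_box(corners):
    """Smallest axis-aligned box (xmin, ymin, xmax, ymax) containing all corners,
    i.e. what a traditional bounding box would report."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)
```

For an elongated 100 × 40 pixel roll rotated by 45°, the rotated box covers 4000 px², while the enclosing axis-aligned box is about 99 × 99 pixels (width `w·|cos θ| + h·|sin θ|`), roughly 9800 px². The traditional box therefore includes a lot of background and says nothing about which way the roll points.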

One such method for detecting arbitrarily rotated objects is DRBox. This algorithm was originally designed to detect objects such as ships, vehicles and airplanes in remote sensing images [1]. In this project, we used a Keras implementation of DRBox written by Paul Pontisso of Thales Group [2] to detect bread rolls, estimate their orientation and classify them.

Dataset

A training dataset of pictures in JPG format had to be created. The pictures were taken with a camera from a top-down view at a distance of 1 meter. This setup resembles the original problem, in which a crate of bread is emptied onto a flat surface from which the robot can then pick and place the bread rolls.

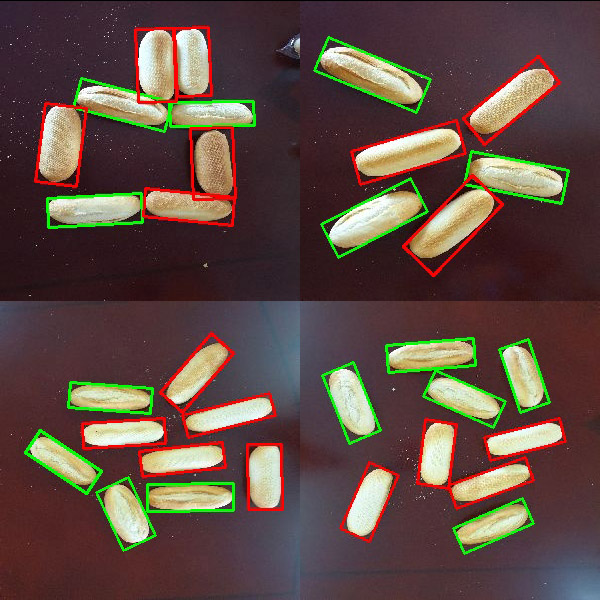

Before the images could be used for training, every image had to be labeled by hand. Labeling was done with roLabelImg, a graphical image annotation tool that can label rotated rectangular regions. The bread rolls were divided into two categories: bread rolls with their top side up and bread rolls with their bottom side up. In total, 340 images were taken, containing over 3000 labeled bread rolls.
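Before training, the hand-made labels have to be read back from disk. The sketch below assumes the Pascal VOC-style XML that roLabelImg writes, where each rotated object has a `name` and a `robndbox` element with `cx`, `cy`, `w`, `h` and `angle` children (angle assumed to be in radians). The `RotatedLabel` class, the file name and the class names `top_up` and `bottom_up` are hypothetical placeholders, not names taken from this project.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from dataclasses import dataclass

@dataclass
class RotatedLabel:
    """One hand-labeled bread roll (hypothetical container)."""
    name: str     # class name, e.g. the hypothetical "top_up" or "bottom_up"
    cx: float     # box centre x, pixels
    cy: float     # box centre y, pixels
    w: float      # box width, pixels
    h: float      # box height, pixels
    angle: float  # rotation as written by the tool (assumed radians)

def parse_rolabelimg(xml_text):
    """Return the rotated boxes found in one roLabelImg-style XML annotation."""
    root = ET.fromstring(xml_text)
    labels = []
    for obj in root.iter("object"):
        box = obj.find("robndbox")
        if box is None:
            continue  # this sketch skips plain axis-aligned <bndbox> objects
        values = [float(box.findtext(tag)) for tag in ("cx", "cy", "w", "h", "angle")]
        labels.append(RotatedLabel(obj.findtext("name"), *values))
    return labels

# Hypothetical annotation in the assumed layout, used as a usage example.
SAMPLE_XML = """<annotation>
  <filename>crate_001.jpg</filename>
  <object>
    <type>robndbox</type>
    <name>top_up</name>
    <robndbox><cx>320.5</cx><cy>240.0</cy><w>120.0</w><h>80.0</h><angle>0.5</angle></robndbox>
  </object>
  <object>
    <type>robndbox</type>
    <name>bottom_up</name>
    <robndbox><cx>100</cx><cy>50</cy><w>90</w><h>60</h><angle>2.1</angle></robndbox>
  </object>
</annotation>"""

if __name__ == "__main__":
    labels = parse_rolabelimg(SAMPLE_XML)
    print(Counter(label.name for label in labels))  # per-class counts for this file
```

Summing these per-class counts over all annotation files is one way to check dataset totals, such as the number of labeled bread rolls per category.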

Results

The neural network was trained in the cloud using Google Colab and achieved an average precision (AP) of 96.8% at an intersection over union (IoU) threshold of 0.5 with the ground truth. Inference, together with decoding the detections using non-maximum suppression, took less than a second on the CPU.
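Both the AP threshold and non-maximum suppression rely on the IoU between rotated boxes, which is harder to compute than for axis-aligned boxes because the overlap is a general convex polygon. The sketch below computes it with Sutherland-Hodgman polygon clipping and the shoelace formula, then applies greedy NMS. It is a standalone illustration under assumed conventions (boxes as `(cx, cy, w, h, angle)` with the angle in radians, and a hypothetical NMS threshold of 0.5), not the code used in DRBox_keras.

```python
import math

def box_corners(box):
    """Corners of a rotated box (cx, cy, w, h, angle), counter-clockwise in a y-up frame."""
    cx, cy, w, h, angle = box
    c, s = math.cos(angle), math.sin(angle)
    offsets = ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2))
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]

def polygon_area(poly):
    """Shoelace formula; the absolute value makes vertex order irrelevant."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def _side(a, b, p):
    """Non-negative if p lies on or to the left of the directed line a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def _crossing(p, q, d_p, d_q):
    """Point on segment p-q where the signed side value changes sign."""
    t = d_p / (d_p - d_q)
    return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))

def clip_convex(subject, clipper):
    """Sutherland-Hodgman: intersection of `subject` with a convex counter-clockwise `clipper`."""
    output = list(subject)
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        inputs, output = output, []
        if not inputs:
            break  # no overlap left
        for prev, cur in zip(inputs[-1:] + inputs[:-1], inputs):
            d_prev, d_cur = _side(a, b, prev), _side(a, b, cur)
            if d_cur >= 0:
                if d_prev < 0:
                    output.append(_crossing(prev, cur, d_prev, d_cur))
                output.append(cur)
            elif d_prev >= 0:
                output.append(_crossing(prev, cur, d_prev, d_cur))
    return output

def rotated_iou(box_a, box_b):
    """Intersection over union of two rotated boxes."""
    inter = clip_convex(box_corners(box_a), box_corners(box_b))
    inter_area = polygon_area(inter) if len(inter) >= 3 else 0.0
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter_area
    return inter_area / union if union > 0 else 0.0

def rotated_nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it by more than the threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(rotated_iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

For example, two 4 × 2 boxes at the same centre, one rotated by 90°, overlap in a 2 × 2 square, giving an IoU of 4 / 12 ≈ 0.33; under the 0.5 threshold used for the AP above, such a detection would not count as a match. The same `rotated_iou` function can decide whether a detection is a true positive when computing AP.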

Conclusion

Deep learning algorithms such as DRBox can be used successfully to detect arbitrarily rotated bread rolls and could potentially be used in a high-speed pick-and-place environment. Inference and decoding time could be reduced by using a GPU and a faster CPU: DRBox has reached up to 80 fps using a GTX 1080 Ti and an Intel Core i7 processor [1]. A higher and more consistent average precision can likely be achieved with a larger and more diverse dataset.

References

[1] L. Liu, Z. Pan, B. Lei, Learning a rotation invariant detector with rotatable bounding box. arXiv preprint arXiv:1711.09405 (2017)

[2] P. Pontisso, DRBox: Detector of rotatable bounding boxes implementation in Keras. GitHub repository, https://github.com/ThalesGroup/DRBox_keras (2019)